The big picture: One thing that's become clear when it comes to Generative AI is that we're still in the early days of the technology. Major evolutions and refinements of existing products are going to be a standard part of the tech industry news cycle for some time to come.

At its annual re:Invent conference in Las Vegas, Amazon Web Services (AWS) exemplified this trend with a series of product and service announcements focused primarily on enhancing its existing offerings rather than introducing completely new ones.

To be clear, there were a few genuinely new entries in the firehose of announcements that have become synonymous with AWS keynote speeches – particularly regarding foundation models. Even there, however, it could be argued that the focus was largely on rebranding or replacing existing products.

Part of the reason for this approach is that big tech companies like Amazon initially succeeded in defining and creating a high-level framework for enabling GenAI. Over time, however, it has become apparent that these tools and processes haven't fully met the needs of many customers.

Simply put, leveraging the capabilities of GenAI was, and in many cases still is, too complex for most organizations.

With this in mind, AWS focused on addressing these gaps at this year's re:Invent. They refined tools and bundled existing products and services to make significant strides toward simplifying the creation and deployment of GenAI technologies. These efforts were designed to accommodate companies across a wide range of technical sophistication.

Notably, they tackled this challenge across an expansive set of offerings, including custom silicon, foundation models, database enhancements, developer tools, and software platforms.

Trainium 2 Chip

Starting at the silicon level, new AWS CEO Matt Garman kicked off his keynote by highlighting the company's substantial investments in custom chips over the last decade. He pointed to AWS's prescient decision to invest in Arm-based CPUs with its Graviton chip, noting that its Graviton-based business is now larger than AWS's entire compute business was when Graviton launched. He then announced the general availability of the Trainium 2 chip, along with EC2 compute instances that use it for AI training and inference workloads.

Going a step further, Garman claimed that Trainium 2 is the first viable alternative to Nvidia GPUs, with a significantly lower cost of operation. Whether that claim holds up remains to be seen, but initial discussions of the chip's architecture suggest it is a significant improvement over the first-generation Trainium.

Interestingly, Garman also revealed early details about Trainium 3, signaling the company's deep commitment to ongoing silicon development. Despite these custom silicon efforts, AWS reaffirmed Nvidia's role as a critical partner by announcing new EC2 instances with Nvidia's Blackwell GPUs, which are set to debut soon.

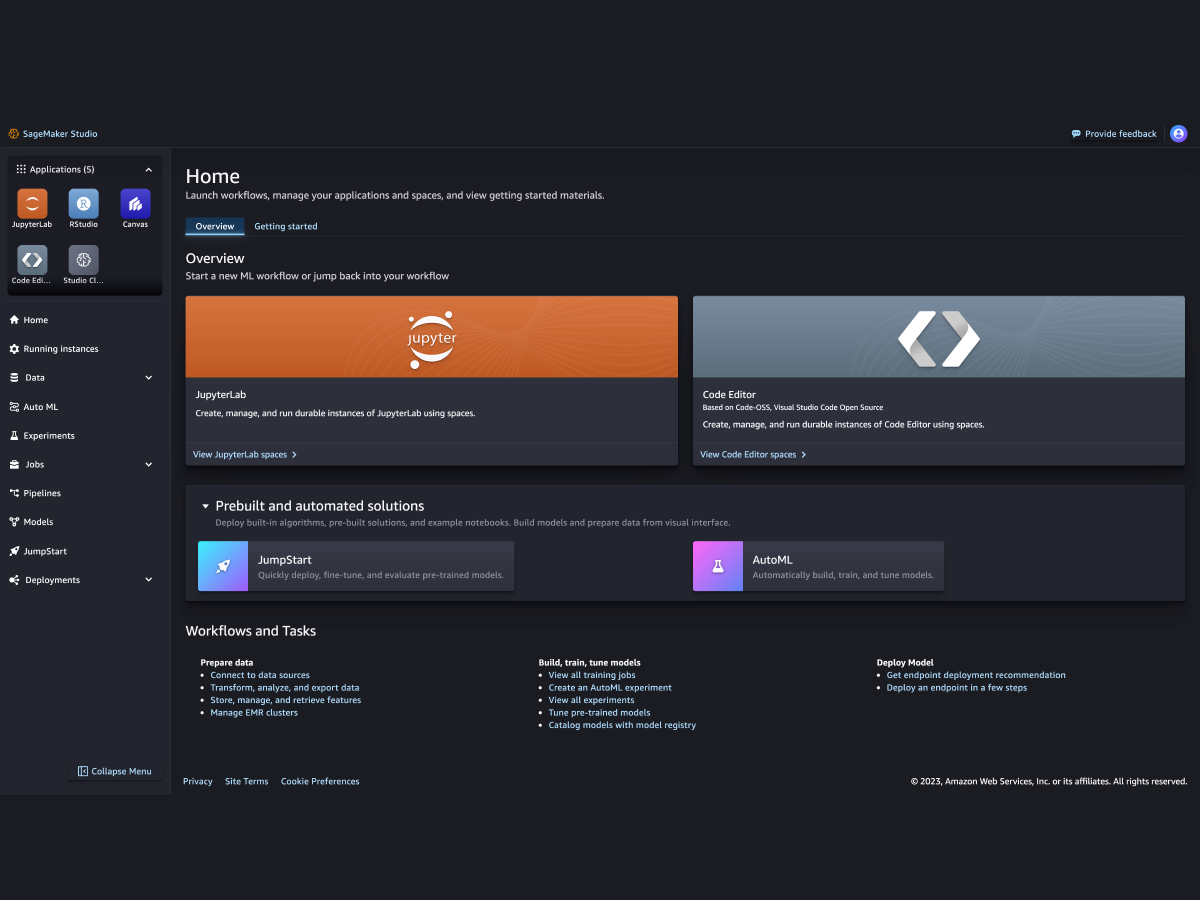

Of course, a critical component of any GenAI compute system is the software used to build and fine-tune the models and applications that run on this hardware. In this regard, Garman introduced numerous enhancements to Amazon's SageMaker and Bedrock platforms, including the launch of SageMaker Unified Studio, which consolidates previously independent AWS services into a single user interface.

SageMaker Unified Studio

Having started as a tool for data scientists building traditional AI/ML models, SageMaker has become increasingly important in the GenAI era as a platform for developing, training, and fine-tuning foundation models. Unsurprisingly, SageMaker now offers enhancements that take full advantage of Trainium 2's new capabilities, positioning the combination as a competitive alternative to Nvidia's CUDA software and GPUs.

Enhancements to Bedrock, a platform tailored for GenAI application developers who want to work with existing foundation models, include access to additional well-known models and a new Bedrock Marketplace that offers a broader selection of models.

Two particularly intriguing Bedrock additions are a model distillation feature and a method for reducing hallucinations. Bedrock Model Distillation transfers knowledge from a large frontier model, such as the 405-billion-parameter version of Llama, into a model as compact as an 8-billion-parameter version by using the larger "teacher" model's responses to fine-tune the smaller "student" model.

Distillation is sometimes mentioned alongside Retrieval Augmented Generation (RAG) as a way to tailor models to specific tasks, but the two work quite differently: RAG supplies relevant external data to a model at query time, while distillation trains a smaller model to mimic a larger one, an approach that may yield more effective results for some applications.

Meanwhile, Bedrock Guardrails now include an Automated Reasoning check, a mathematically verifiable technique designed to substantially reduce hallucinations in GenAI outputs. Details on how it works were sparse, but it certainly sounds like a potentially important breakthrough.

Bedrock also incorporates some fine-tuning capabilities that were previously exclusive to SageMaker, presenting them at a higher level of abstraction. While this makes Bedrock more versatile, it can also create overlap and confusion about which tool is best suited to a given task or type of user.

Amazon faced the same kind of confusion over the roles of SageMaker, Bedrock, and its Q agent capabilities when it first introduced Q at last year's re:Invent (see "The Amazon AWS GenAI Strategy Comes with a Big Q" for more). Since then, I believe the company has improved the positioning of each option in its development stack, but the stack is still extremely complex and would benefit from further simplification and clearer messaging.

To help companies organize their data for use with GenAI foundation models, AWS introduced notable enhancements to its S3 storage and database offerings. Highlights include Amazon S3 Tables, which provide managed Apache Iceberg tables to accelerate data lake analytics, and the automatic creation of searchable metadata. These and other announcements underscore AWS's commitment to improving data preparation and organization.

For developers, AWS expanded Amazon Q Developer, its suite of AI-powered capabilities for writing new code, modernizing legacy Java and mainframe code, automating code documentation, and more.

Two of the biggest surprises from the AWS keynote were the appearance of former AWS CEO (and current Amazon CEO) Andy Jassy and the unveiling of the company's new family of foundation models, branded Nova. The range includes four tiers of general-purpose models, most of them multimodal, alongside specialized models for image and video creation.

Together with the Trainium 2 chip, the SageMaker and Bedrock enhancements, and the improved database tools, the Nova models round out a comprehensive GenAI portfolio. AWS believes this positions it as a leading full-solution provider for GenAI.

That said, Nova's introduction raises questions. The Nova models replace Amazon's Titan models, which were heralded not long ago as a key part of the company's AI strategy. This sudden shift may muddy the messaging for companies and developers already working with Titan. Still, discussions with AWS representatives suggest that Nova represents a significant leap forward in architecture and performance. While the pivot away from Titan may raise eyebrows, it reflects the fast-moving, dynamic nature of the GenAI space.

As I walked away from the event, I couldn't help but be impressed by the comprehensive range of enhancements AWS has made to its GenAI tools and services. The technology will undoubtedly continue to evolve, but as we move from the era of GenAI proofs of concept to enterprise-wide GenAI deployments, having access to a full suite of tools from a major cloud computing provider that addresses many early pain points is bound to be a game-changer.

Bob O'Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on Twitter @bobodtech.