Forward-looking: Challenging conventional wisdom, Nvidia CEO Jensen Huang said that his company's AI chips are outpacing the historical performance gains set by Moore's Law. This claim, made during his keynote address at CES in Las Vegas and repeated in an interview, signals a potential paradigm shift in the world of computing and artificial intelligence.

For decades, Moore's Law, the observation first made by Intel co-founder Gordon Moore in 1965, has been the driving force behind computing progress. It predicted that the number of transistors on computer chips would roughly double every year, a pace Moore revised in 1975 to roughly every two years, leading to exponential growth in performance and plummeting costs. In recent years, though, that pace has shown signs of slowing.

Huang, however, painted a different picture of Nvidia's AI chips. "Our systems are progressing way faster than Moore's Law," he told TechCrunch, pointing to the company's latest data center superchip, which is claimed to be more than 30 times faster for AI inference workloads than its predecessor.

Huang attributed this accelerated progress to Nvidia's comprehensive approach to chip development. "We can build the architecture, the chip, the system, the libraries, and the algorithms all at the same time," he explained. "If you do that, then you can move faster than Moore's Law, because you can innovate across the entire stack."

This strategy has apparently yielded impressive results. Huang claimed that Nvidia's AI chips today are 1,000 times more advanced than what the company produced a decade ago, far outstripping the pace set by Moore's Law.
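To put the 1,000x figure in context, the arithmetic can be sketched in a few lines of Python. This is only a rough illustration, not Nvidia's own math: it assumes Huang's performance claim can be compared directly with transistor-count doubling, and the function names and the two doubling periods (one year, per Moore's 1965 prediction, and two years, per his 1975 revision) are choices made here for the comparison.

```python
import math


def moores_law_factor(years: float, doubling_period_years: float) -> float:
    """Growth factor after `years` if a quantity doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


def implied_doubling_period(factor: float, years: float) -> float:
    """Doubling period (in years) implied by growing `factor`-fold over `years`."""
    return years / math.log2(factor)


YEARS = 10            # "a decade ago", per Huang's claim
CLAIMED_GAIN = 1000   # Huang's claimed improvement (performance, not transistor count)

# Assumption: compare against both common statements of Moore's Law.
one_year_doubling = moores_law_factor(YEARS, 1)   # 1965 prediction
two_year_doubling = moores_law_factor(YEARS, 2)   # 1975 revision

implied_period = implied_doubling_period(CLAIMED_GAIN, YEARS)

print(f"Doubling every year for {YEARS} years:      {one_year_doubling:.0f}x")
print(f"Doubling every two years for {YEARS} years: {two_year_doubling:.0f}x")
print(f"A {CLAIMED_GAIN}x gain implies doubling every {implied_period * 12:.1f} months")
```

Under these assumptions, a 1,000x gain over ten years works out to a doubling roughly every 12 months. That is well ahead of the two-year cadence usually associated with Moore's Law today, and about in line with the one-year doubling of the original 1965 prediction.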

Rejecting the notion that AI progress is stalling, Huang outlined three active AI scaling laws: pre-training, post-training, and test-time compute. He pointed to the importance of test-time compute, which occurs during the inference phase and allows AI models more time to "think" after each question.

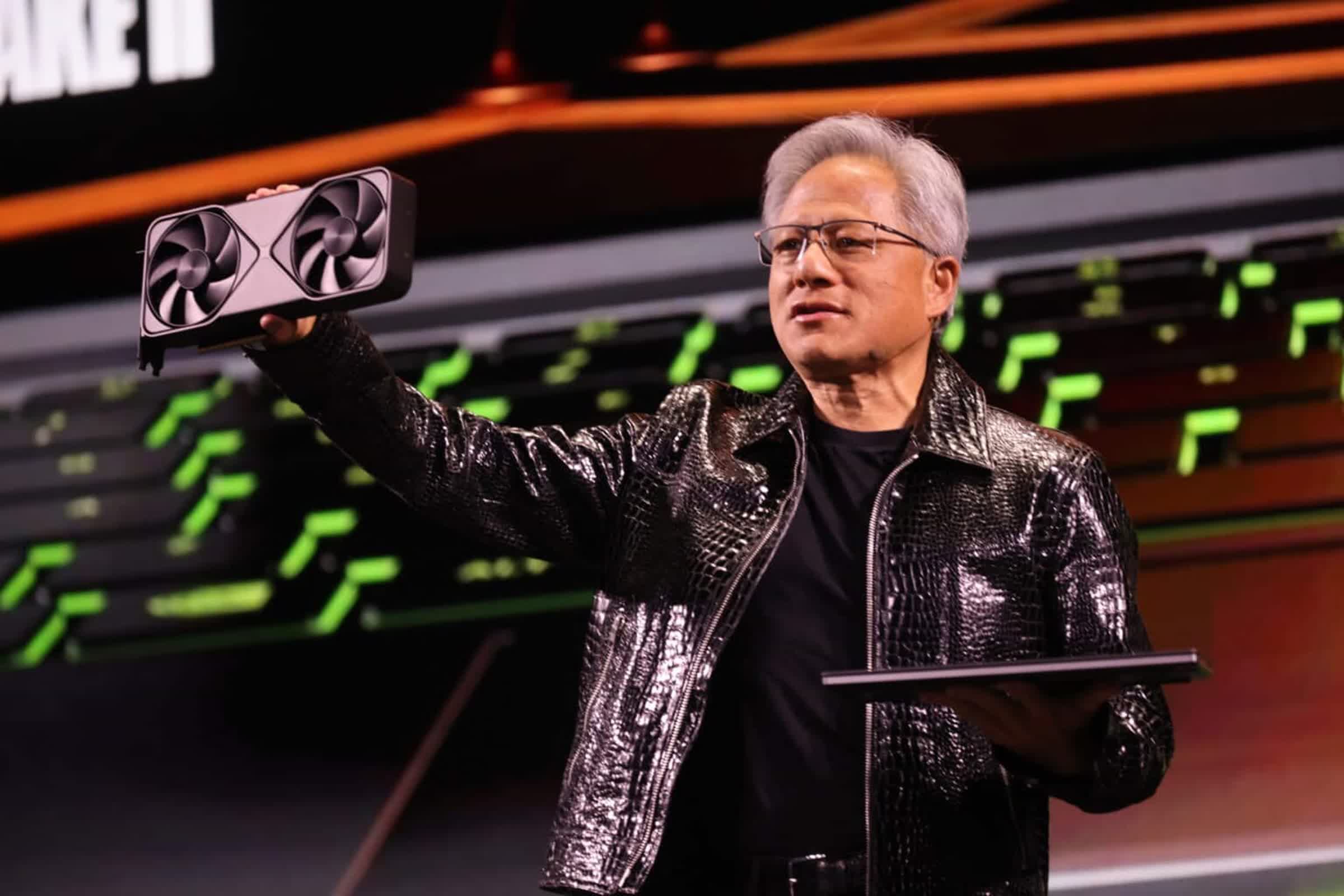

During his CES keynote, Huang showcased Nvidia's latest data center superchip, the GB200 NVL72, touting AI inference performance 30 to 40 times faster than that of its predecessor, the H100. This leap in performance, Huang argued, will make expensive AI reasoning models like OpenAI's o3 more affordable over time.

"The direct and immediate solution for test-time compute, both in performance and cost affordability, is to increase our computing capability," Huang said. He added that in the long term, AI reasoning models could be used to create better data for the pre-training and post-training of AI models.

Nvidia's claims come at a crucial time for the AI industry, with AI companies such as Google, OpenAI, and Anthropic relying on its chips and on continued gains in their performance. Moreover, as the focus in the tech industry shifts from training to inference, questions have arisen about whether Nvidia's expensive products will maintain their dominance. Huang's claims suggest that Team Green is not only keeping pace but setting new standards in inference performance and cost-effectiveness.

While the first versions of AI reasoning models like OpenAI's o3 have been expensive to run, Huang expects the trend of plummeting AI model costs to continue, driven by computing breakthroughs from hardware companies like Nvidia.

Jensen Huang claims Nvidia's AI chips are outpacing Moore's Law