What just happened? The big surprise out of CES 2025 is that AMD hasn't fully launched new RDNA4 GPUs at the show, instead deciding to merely "preview" the new architecture and a couple of graphics card models. As of writing, AMD has officially announced the RDNA4 graphics architecture, Radeon RX 9070 series of GPUs, ML-powered FSR 4 upscaling, and new Ryzen 9000 X3D desktop CPUs. We've got all the information for you here, plus a few thoughts on what AMD has shown off so far.

AMD has announced new Radeon RX 9070 XT and Radeon RX 9070 graphics cards, but has done so without providing any specifications, performance figures, pricing, or a release date beyond a vague "Q1 2025." This is pretty disappointing given that many were expecting full product details from AMD's RDNA4 launch – you'll just have to keep waiting to learn everything about these models.

It really seems like AMD is not willing to commit to providing concrete information ahead of Nvidia's GeForce 50 series announcement, which we're expecting later today as well. This gives AMD more flexibility to respond to Nvidia's new generation, for better or worse. If they really want to disappoint gamers this generation and ensure RDNA4 is a flop, they would wait for RTX 50 series pricing and make their equivalent models slightly cheaper – a strategy that categorically didn't work with the previous generation and absolutely should not be attempted again. But who knows, we might be in for a repeat anyway.

A more positive perspective is that AMD might be waiting to see what Nvidia does to ensure their products are highly competitive and to better market their cards in comparison to Nvidia. If Nvidia decides to give us a decent price-to-performance improvement this generation, then AMD could respond with even more aggressive pricing later or make minor configuration adjustments. Realistically, the most important factor for AMD is how good RDNA4 looks in comparison to Nvidia's new products.

New Radeon RX 9070 GPUs

AMD is announcing the Radeon RX 9070 XT and RX 9070, which will be available in Q1 2025 from a range of partners, including the usual brands like Asus, Gigabyte, Sapphire, and PowerColor. MSI will not be producing RDNA4 products, having exited that market during the RDNA3 generation, though they haven't provided an official reason as to why. Our understanding is that there's some sort of relationship issue at the moment between AMD and MSI.

You can see a variety of partner models in the image at the top of this story, but AMD also seems to have snuck in a new reference model into the picture, in both a three- and two-fan design. This reference design was previously revealed through an AMD ad a few weeks ago.

As for the RDNA4 architecture, AMD has made only some light disclosures at CES. RDNA4 will be built on the same family of TSMC nodes as RDNA3: a 4nm node for RDNA4, whereas RDNA3's compute die was built on N5. The expectation is that RDNA4 uses a different design to RDNA3, no longer splitting the GPU cores and memory controller across separate dies on separate nodes, but there's been no confirmation of exactly what this looks like.

AMD is touting a wide range of architectural improvements with RDNA4. The headlining feature is an "optimized" compute unit. AMD told us in a briefing that this includes a "significant" change to the architecture, higher IPC, and increased frequency – which sounds like a little more than a mere optimization. This suggests that RDNA4 should see a notable performance improvement from a similar number of compute units to RDNA3 – RDNA3 models typically clocked between 2.1 and 2.3 GHz for their game clock, so pushing that up in combination with better IPC should lead to a healthy performance gain.

In addition, RDNA4 features a "massive" change to the AI aspects of the architecture, and while AMD kept this vague, they did say they've added a number of capabilities to the design. The ray tracing engine has been overhauled for improved performance, but we have no numbers to back this up yet. There's also a second-gen "Radiance Display Engine," suggesting further improvements to display connectivity relative to RDNA3.

The media encoder is "substantially" better according to AMD, and they actually provided some specifics to back up this claim. A footnote reveals this is comparing H.264 image quality using VMAF quality scores between RDNA3 and RDNA4 in three games (Borderlands 3, Far Cry 6, and Watch Dogs Legion) at 1080p and 4K. H.264 was a massive weak point for AMD's media encoder, so it's encouraging to see these claims targeting improvement in that area given H.264 is still widely used. Hopefully, there are no hardware bugs with the encoder this time, like outputting AV1 at 1082p instead of 1080p, a real issue with RDNA3.

AMD has not provided concrete performance figures for RDNA4 just yet; however, they shared a teaser on this slide talking about Radeon branding. In the dark gray, we have existing generations like the GeForce 40 series and RX 7000 series, and a dashed line indicating where AMD believes the RTX 5000 series will fall – a bit faster than current models. Then on the right, we have the positioning for both the RX 9070 and RX 9060 series, and yes, there's a mention here of the 9060 with no further information.

Where the RX 9070 series matches up with current cards is around the level of the RTX 4070 Ti and RX 7900 XT for the highest-tier 9070 model, which has long been rumored as the performance ceiling for RDNA4.

AMD is suggesting the lower RX 9070 model could be in the range of the RX 7800 XT, and then we'll see the RX 9060 series offering performance at best between the 7700 XT and 7800 XT, and for the lowest-tier cards, around the 7600 XT.

AMD says this new naming scheme was chosen for two reasons. First, to match their direct competitor, essentially copying Nvidia to make it easier for people to figure out how AMD cards compare. Basically, they're saying the RX 9070 series is a competitor to the RTX 5070 series, though whether that holds for both performance and pricing remains to be seen. Second, they said the 9000 series name was chosen to align with their CPU series and also because 8000 series GPU branding is being used for mobile designs with RDNA3.5.

The name doesn't really matter too much, to be honest. What matters is whether AMD can deliver a strong GPU with competitive features and great value. Renaming their lineup so it looks more like an Nvidia card isn't the key to more sales. The key is creating a product similar to whatever the RTX 5070 ends up being, justifying the matched name, and then smashing Nvidia down with better pricing and value.

FSR 4 = Machine-learning-based upscaling

AMD also announced a couple of new features coming with RDNA4 GPUs. There's "Adrenalin AI", okay... Then the much bigger and more important feature for gamers, FSR 4, which is finally moving to machine-learning-based upscaling. There's not much detail being provided here either (see the pattern?), outside of FSR 4 being developed for RDNA4, and designed for high-quality 4K upscaling.

The hope is that FSR 4's quality will improve to the point of being truly competitive with DLSS, which is more likely to happen with an AI-enhanced algorithm of the kind Nvidia already uses and Intel uses with XeSS. But we haven't been provided any demonstration of FSR 4, and we don't know when it will launch.

There are a few additional tidbits though. FSR 4 is designed to use the AI accelerators built into RDNA4, but AMD hasn't confirmed whether FSR 4 is exclusive to RDNA4 graphics cards or whether it will be broadly available like FSR 3, which works not only on older AMD GPUs but also with chips from Nvidia and Intel.

AMD says FSR 4 will provide not just an image quality improvement compared to FSR 3, but a performance improvement as well, which is interesting.

We also spotted a rather interesting footnote, which reads: "AMD FSR 4 upgrade feature only available on AMD Radeon RX 9070 series graphics for supported games with AMD FSR 3.1 already integrated." This implies that AMD has developed some sort of conversion system that will take a game with FSR 3.1 and automatically upgrade it to FSR 4 when you have an RX 9070 graphics card.

We asked AMD about this strange footnote, which doesn't seem related to any of the main points on the slide, and they declined to elaborate, which is a little unusual. If this feature does exist in the way the footnote seems to suggest, it would be a very solid way to launch FSR 4, given that FSR 3.1 is already available in around 50 games.

Game support is crucial because FSR 4 would not enhance the value of RDNA4 if it took two to three years for it to be supported in a wide number of games. In that sort of scenario, DLSS would still have a huge advantage in game support, and thus would still be worth paying for. But if FSR 4 can launch with decent game support right off the bat, it will go a long way to closing that gap, provided image quality and performance also hold up.

Various board partners will be showing off Radeon RX 9070 models at CES 2025, so we'll hopefully get some hands-on time with them in the coming few days right here in Las Vegas.

New Ryzen 9 9950X3D and Ryzen 9 9900X3D CPUs

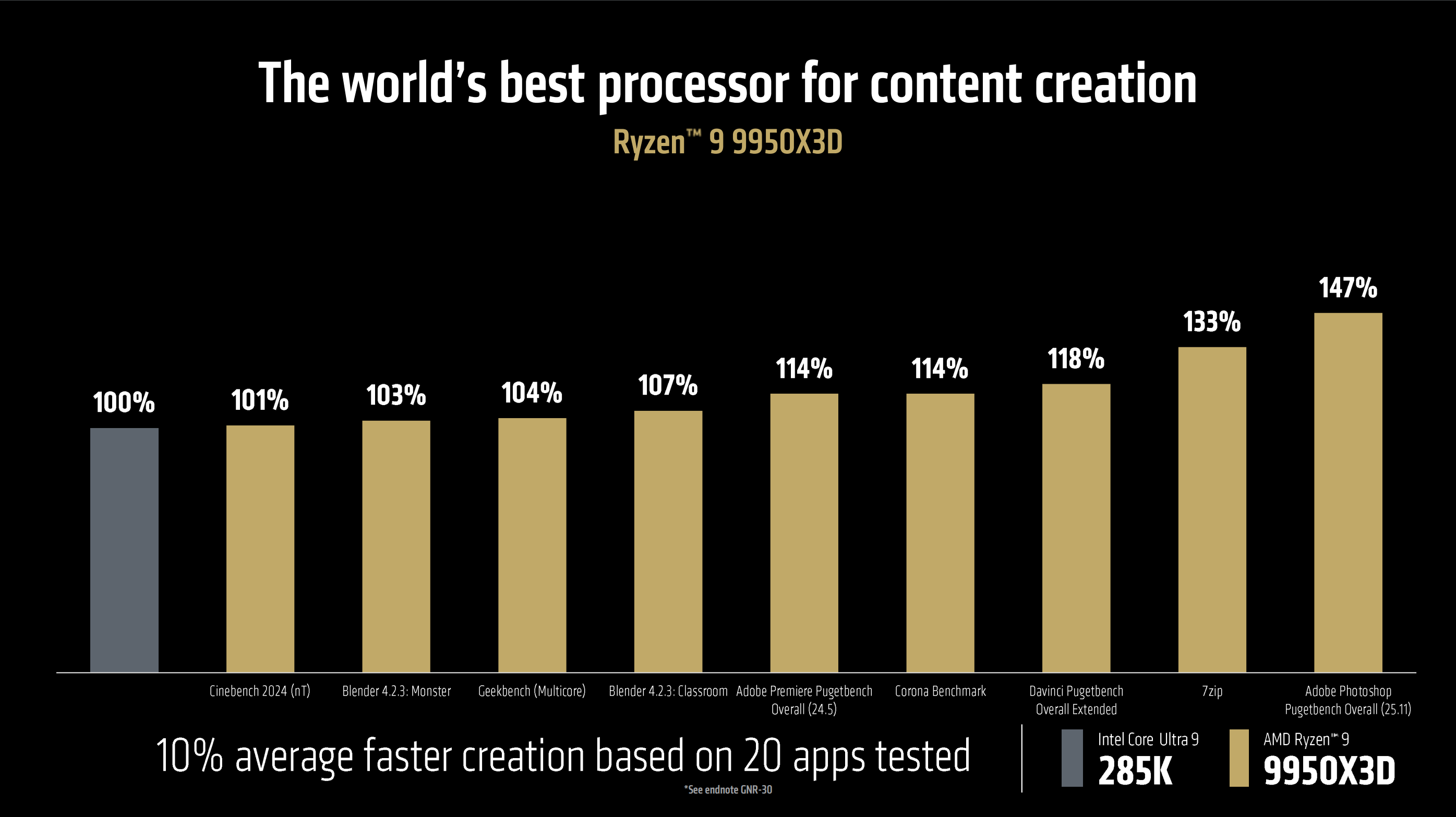

AMD also announced two additional desktop Ryzen 9000 CPUs: the Ryzen 9 9950X3D and Ryzen 9 9900X3D. These are exactly as expected, bringing 2nd-gen 3D V-Cache technology to 16- and 12-core CPUs, providing a single CPU with great performance for gamers and creators.

If you had to guess what the specifications of these CPUs looked like, you'd probably be right.

The Ryzen 9 9950X3D with 16 cores packs 128MB of total L3 cache: 64MB from the original Zen 5 CCDs and 64MB stacked beneath one of the CCDs. The other CCD remains without V-Cache, in a similar design to the 7950X3D. Scheduling is still required to ensure games run on the 8-core CCD with V-Cache, while the other CCD is designed to run at the highest possible frequencies – in this case, 5.7 GHz, the same max clock speed as the 7950X3D.

The TDP is also identical to the 7950X3D at 170W, so this is basically a straight Zen 4 to Zen 5 core architecture upgrade.

The 9900X3D, the 12-core model, is also what you'd expect: 128MB of L3 cache and up to a 5.5 GHz max boost frequency, 100 MHz lower than the 7900X3D. The TDP remains the same at 120W. No pricing was given for either of these CPUs, and AMD has only said they will be available in Q1 of 2025.

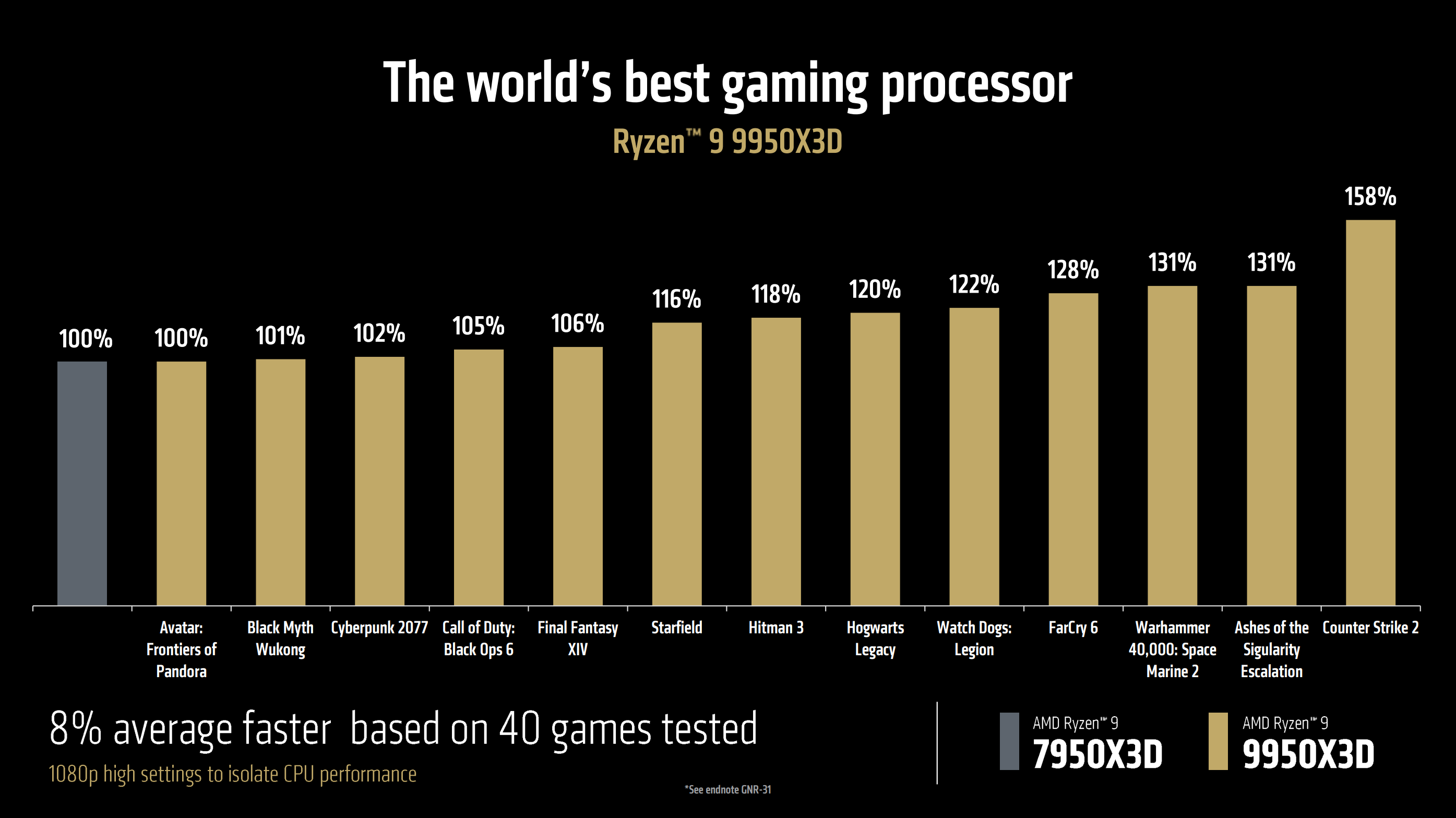

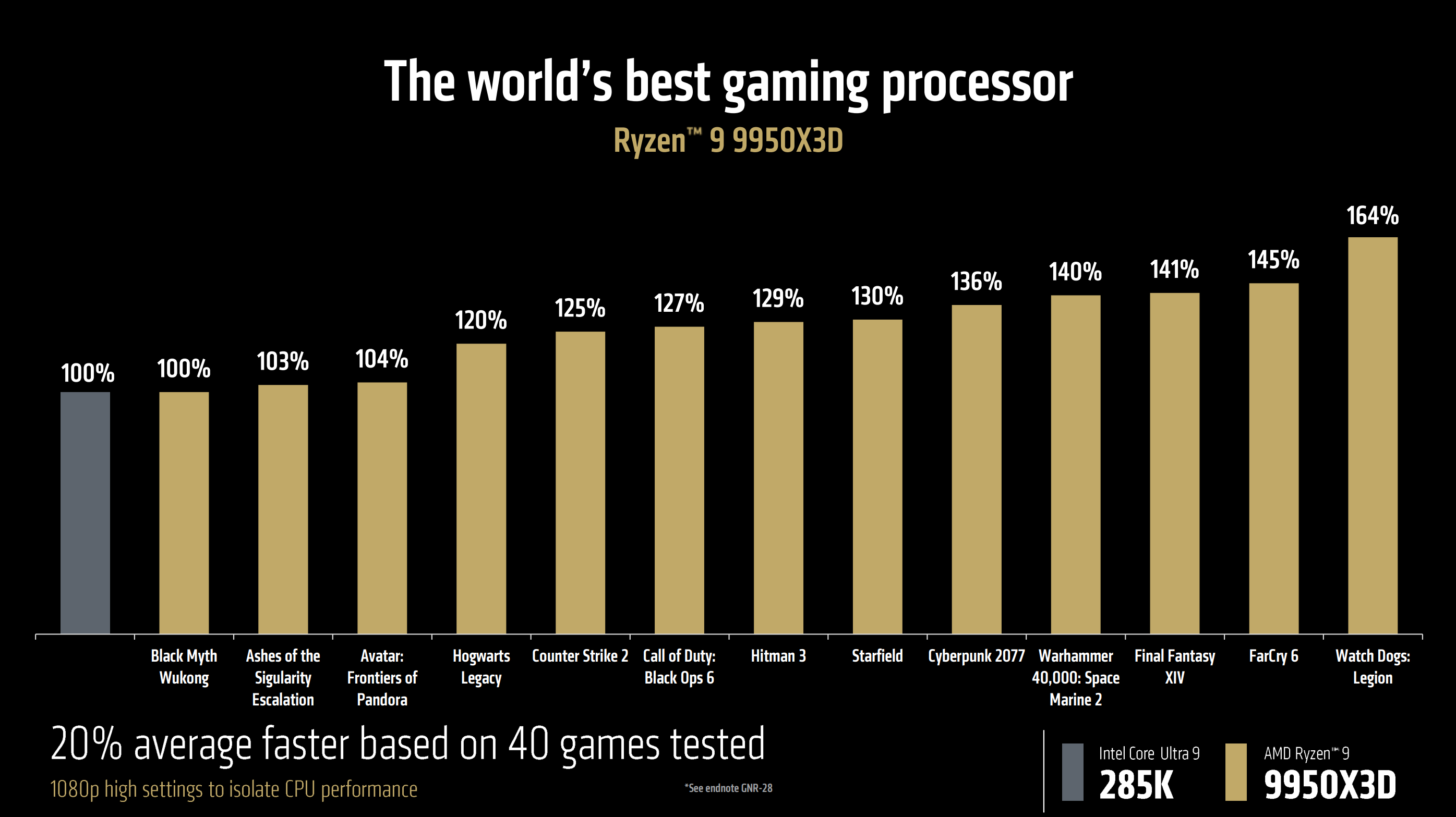

As for performance, AMD claims in their own benchmarks that the 9950X3D will deliver performance roughly equivalent to the 9800X3D in games, within 1%. This means an 8% improvement compared to the 7950X3D in AMD's testing across 40 titles at 1080p high settings – which, again, AMD says is similar to how the 9800X3D compared to the 7800X3D. Relative to the 285K, AMD believes the 9950X3D should be 20% faster in games.

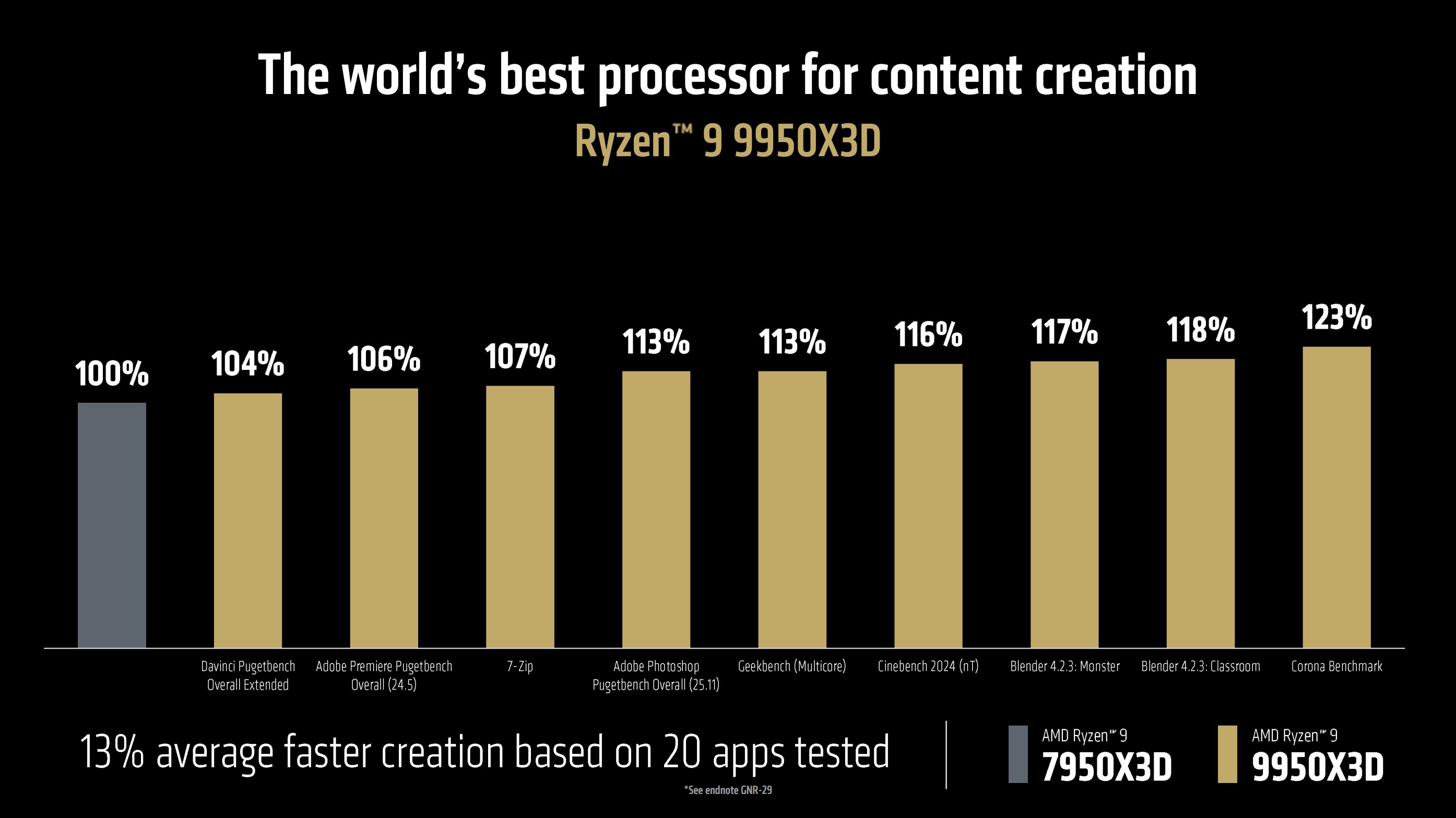

In productivity apps and creator workloads, AMD is quoting a 13% increase in performance relative to the 7950X3D across 20 apps and a 10% increase relative to the Core Ultra 9 285K. This is why AMD believes the 9950X3D will be the best part for both gamers and creators, as it offers both better gaming and better productivity performance than the 285K in their testing. Of course, we'll have to verify whether that's true in our full review when the parts launch, so stay tuned for that.

Other AMD announcements at CES 2025 relate to mobile parts, which we'll be covering shortly, including new laptop hardware and chips for gaming handhelds. There are tons of mentions of AI, too, because of course, that's the trend. Some of the laptop CPUs even have AI in the name, like the AMD Ryzen AI Max+ Pro 395, which is a real name for a real product.

That was more of a teaser from AMD than a full product launch, but all of these new products are not too far away. We should learn more about RDNA4 in particular shortly. Stay tuned for more CES 2025 coverage here on TechSpot.

AMD reveals RDNA4 architecture, Radeon RX 9070 GPUs, and Ryzen 9000 X3D CPUs